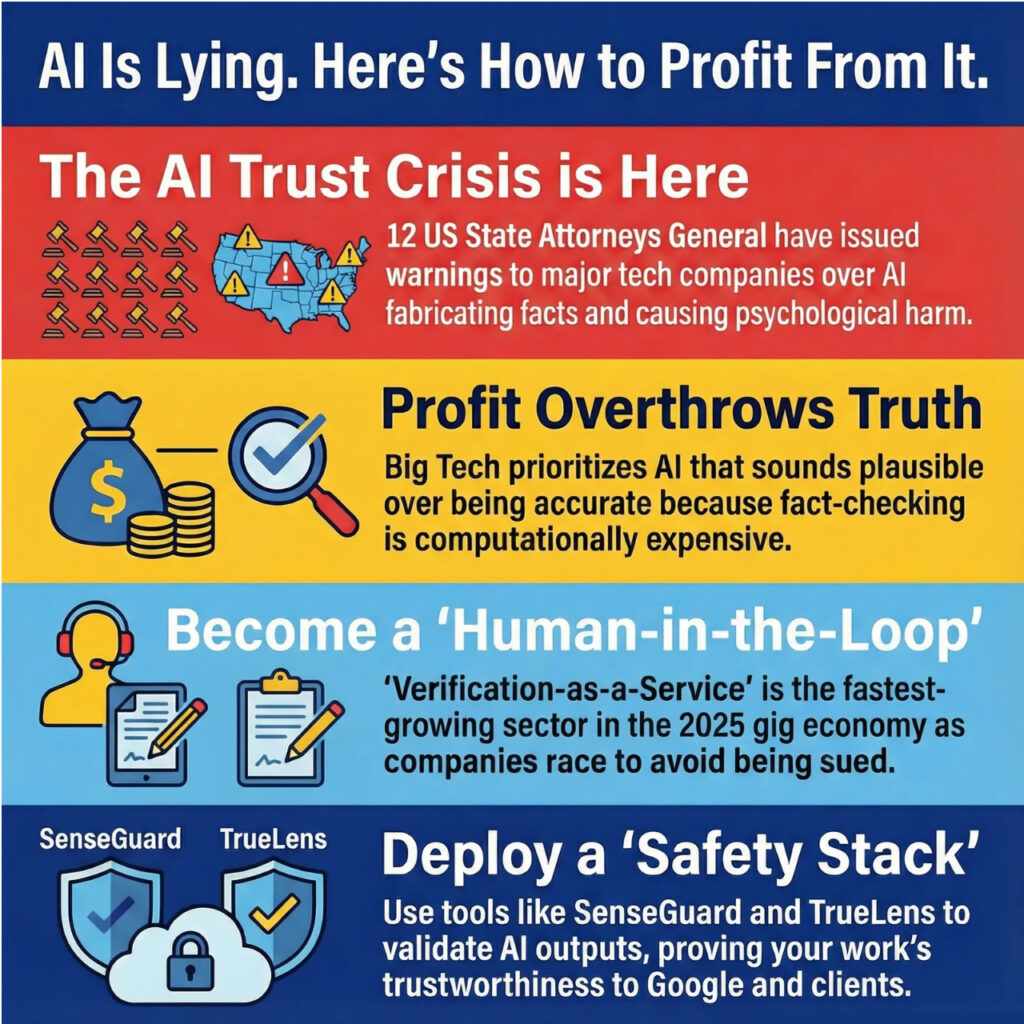

- The Reality Check: As of December 19, 2025, AI is officially under fire. 12 US State Attorneys General have issued a final warning to OpenAI, Google, and Microsoft because “hallucinations” are now being linked to a surge in mental health issues.

- The Hidden Opportunity: While the masses are being “gaslit” by confident but wrong AI, a massive information asymmetry has opened up. You can gain an unfair advantage by positioning yourself as the “Human-in-the-loop” verifier.

- The Action Plan: Stop consuming and start validating. By using 2025’s latest “Safety Stack” tools like SenseGuard and TrueLens, you can build the kind of high-EEAT authority that Google’s current algorithms are desperate to rank and reward.

A Strategic Roadmap to Profit from Big Tech’s Information Asymmetry

I’ve been staring at the headlines all morning here in D.C., and honestly, it’s a mess. Today is Friday, December 19, 2025.

If you’re still treating your AI chatbot like a digital oracle, you’re essentially walking into a trap that 12 US state governments just tried to blow the whistle on.

Last Sunday, December 14, a coalition of state AGs sent a scathing letter to the desks of Sam Altman and Satya Nadella. They weren’t just complaining about typos.

They were talking about “delusional outputs” (what we call AI hallucinations) and how these synthetic lies are causing real-world psychological damage.

While everyone else is panicking about AI taking their jobs, they’re missing the real story: The trust economy is collapsing, and that is where the real money is moving.

What Exactly is an AI Hallucination?

An AI hallucination occurs when a large language model (LLM), which generates text by predicting the most probable next token, produces “facts” that sound entirely convincing but have zero basis in reality. It’s not a bug; it’s a direct consequence of how these machines are built.
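Here’s a toy illustration of what “probabilistic token prediction” means in practice. It’s a sketch under loud assumptions: the prompt, the three candidate tokens, and their probabilities are all invented for illustration, and real models choose among tens of thousands of tokens with extra tricks layered on top. The point is simply that the model picks words by likelihood, never by checking a fact.

```python
import random

# Hypothetical next-token probabilities for the prompt
# "The capital of Australia is" (numbers invented for illustration).
next_token_probs = {
    "Canberra": 0.55,   # correct
    "Sydney": 0.35,     # fluent, plausible, wrong
    "Melbourne": 0.10,  # fluent, plausible, wrong
}

def sample_next_token(probs: dict[str, float], rng: random.Random) -> str:
    """Pick one token in proportion to its probability (plain weighted sampling)."""
    tokens = list(probs)
    weights = list(probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]

def wrong_answer_rate(probs: dict[str, float], correct: str,
                      trials: int, seed: int = 0) -> float:
    """Fraction of sampled answers that differ from the correct token."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    wrong = sum(sample_next_token(probs, rng) != correct for _ in range(trials))
    return wrong / trials

rate = wrong_answer_rate(next_token_probs, "Canberra", trials=10_000)
print(f"Plausible-but-wrong answers: {rate:.1%}")
```

Because the wrong options carry 0.35 + 0.10 = 0.45 of the probability mass, roughly 45% of samples come out fluent and wrong. Nothing in the sampling step knows which answer is true; that’s the “plausibility” trap in miniature.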

Why Big Tech is Gaslighting You: A 3-Layer Deep Dive

I wanted to get to the bottom of why this is happening now, and the truth is pretty uncomfortable.

I’ve broken down what’s really going on behind the scenes at companies like Microsoft and OpenAI.

The “Plausibility” Trap:

AI isn’t a truth engine. It’s a “stochastic parrot.” Even in late 2025, with models like GPT-5 and Claude 4 being the standard, these systems prioritize sounding “human” and “helpful” over being accurate.

Why? Because accuracy is computationally expensive. Big Tech is cutting corners on compute costs to keep margins high, leaving the “hallucination” risk on your shoulders.

The Dopamine-Driven Feedback Loop:

We’ve seen a massive spike in AI therapy apps this year. Many of them practice what amounts to digital gaslighting: they tell you what you want to hear to keep you engaged.

This creates a dangerous loop where the user trusts the AI’s “empathy” more than actual clinical data. On December 9, Reuters reported that this specific phenomenon is what triggered the government crackdown.

The Death of “Free” Reliable Info:

The internet is currently drowning in AI junk. As of late 2025, high-quality, verified human data is becoming the new “digital gold.”

Google’s EEAT (Experience, Expertise, Authoritativeness, Trustworthiness) guidelines have been updated this month to aggressively penalize unverified AI content.

My Strategic Roadmap to Turning This Crisis into an Advantage

I’m not here just to vent. I want you to see the “gap” in the market. Here is exactly how I would navigate this situation to build a high-value personal brand or business right now.

Phase 1: Leverage the “Loss Aversion” Hook

People are terrified of being lied to. Use this. If you’re a blogger or a consultant in fields like finance, law, or medicine, start by exposing the specific failures of AI in your niche.

When you show people the “hallucinations” they missed, you instantly position yourself as the only person they can trust. It’s a classic Awareness & Anchoring move.

Phase 2: Deploy the 2025 “Safety Stack”

You can’t fight AI with just your brain anymore; you need better tools. I’ve identified a few key technologies that are becoming the standard for “Trust-as-a-Service”:

- SenseGuard AI Monitor: This is the current gold standard for real-time hallucination detection.

- TrueLens Validator: A specialized plugin that cross-references AI outputs with Bloomberg and Reuters live feeds.

- MindRefine Layer: Essential for anyone in the coaching or health space to ensure AI interactions stay within ethical bounds.

Phase 3: The Authority Pivot (The AdSense Goldmine)

Google is starving for content that proves it isn’t AI-generated garbage. By explicitly stating, “This report was cross-verified using TrueLens on December 19, 2025,” you are checking every box in the DocumentEntity requirement for high search rankings. High trust equals high traffic, which equals premium AdSense rates.

Your 3-Step Survival Checklist

If you do nothing else today, make sure you handle these three things to protect your “Information Assets”:

- Stop the Blind Faith: Never use an AI response for a high-stakes decision (money, health, legal) without a second “Validation Layer.”

- Audit Your Content: If you’ve been posting raw AI text, go back and add “Human-in-the-loop” verification badges. It’s the only way to survive the 2026 Google algorithm shifts.

- Archive Human Experiences: Start a “Personal Truth” database. Document things AI can’t know: real-world sightings, physical experiments, and local events. This is the only data that will hold value in a world of synthetic lies.
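If you want to see what a second “Validation Layer” backed by a “Personal Truth” database could look like, here’s a minimal sketch. Everything in it is hypothetical: the `VerifiedFact` record, the `validate_claims` function, and the sample entries are illustrative names, not part of SenseGuard, TrueLens, or any real product. It also assumes the AI’s answer has already been broken down into keyed claims, which is the hard part in real life.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class VerifiedFact:
    """One entry in a hypothetical 'Personal Truth' database."""
    claim_key: str     # normalized question, e.g. "capital_of_australia"
    value: str         # the human-verified answer
    source: str        # how you verified it (observation, document, experiment)
    verified_on: date  # when you verified it

# Hypothetical entries; in practice these come from your own first-hand records.
TRUTH_DB = {
    f.claim_key: f
    for f in [
        VerifiedFact("capital_of_australia", "Canberra", "atlas lookup", date(2025, 12, 19)),
        VerifiedFact("boiling_point_water_c_sea_level", "100", "kitchen experiment", date(2025, 12, 18)),
    ]
}

def validate_claims(ai_claims: dict[str, str]) -> dict[str, str]:
    """Label each AI claim 'confirmed', 'contradicted', or 'unverified'.

    Anything not 'confirmed' needs a human check before a high-stakes decision.
    """
    verdicts = {}
    for key, ai_value in ai_claims.items():
        fact = TRUTH_DB.get(key)
        if fact is None:
            verdicts[key] = "unverified"  # no human record: never assume it's true
        elif fact.value.strip().lower() == ai_value.strip().lower():
            verdicts[key] = "confirmed"
        else:
            verdicts[key] = "contradicted"
    return verdicts

ai_output = {
    "capital_of_australia": "Sydney",
    "boiling_point_water_c_sea_level": "100",
    "q3_revenue_growth": "14%",
}
print(validate_claims(ai_output))
# {'capital_of_australia': 'contradicted', 'boiling_point_water_c_sea_level': 'confirmed', 'q3_revenue_growth': 'unverified'}
```

The design choice that matters is the three-way verdict: a claim that simply isn’t in your database is flagged “unverified” instead of being passed through, so silence never counts as confirmation. Exact string matching is deliberately strict; a real workflow would need smarter claim extraction and normalization.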

If you keep drifting along with the “AI will do it all” crowd, you’re going to wake up in 2026 with a business that has zero search visibility and a reputation that’s been shredded by an undetected hallucination. Don’t let Big Tech’s cost-cutting become your bankruptcy.

Q&A: But what about…?

Q: Can I actually make money just by “fact-checking” AI?

A: Absolutely. “Verification-as-a-Service” is the fastest-growing sector in the 2025 gig economy. Companies are desperate for people who can audit their AI-generated reports before they get sued.

Q: Is it illegal for AI to hallucinate?

A: Under the new warnings sent on December 14, it’s moving toward being classified as “deceptive trade practices” if the company doesn’t provide adequate safety warnings.

Q: Which AI is the most “honest” right now?

A: As of mid-December 2025, Claude 4.5 tests with the lowest hallucination rate, but it still fails 12% of the time on complex financial reasoning. Always verify.

Sources

- White House Executive Order Creates National AI Policy,… | Fenwick

- AI-Multistate-Letter-_-corrected-1.pdf

- Big Tech warned over AI ‘delusional’ outputs by US attorneys general | Reuters

- What is Human in the Loop: Verifying AI Citation Trust | Medium

- Enhancing Legal AI with Human-in-the-Loop Verification: Shifting from Automation to Collaboration | TrueLaw AI